Leveraging Google job search technologies

Job search is a key part of the user experience on our job portal. When users search for jobs, they engage more with the platform, find what they are looking for, and eventually sign up or buy one of our products.

Our job search feature is a custom implementation built on top of ElasticSearch that has been working very well for the last few years. Nonetheless, to make sure we stay ahead in the game of job search we are exploring ways to enhance our search tools.

One option under consideration is to replace our ElasticSearch implementation with Google’s Cloud Talent Solution (CTS). This service uses artificial intelligence to improve the relevance of job search results.

Queues, background jobs, API integration, metrics, A/B testing, monitoring, legacy code… with such a diverse set of tech ingredients in the project, we thought it would be an interesting case to share. Let’s look at the approach we took and at the architecture we built to implement it.

How Google CTS works

In a nutshell, you first push your whole job base to CTS through its REST API. You can then query that data with free text and various optional filters, such as location or salary range. Google seems to have done a good job of modeling job search: the API entities are well designed and very specific to the domain. The API also accepts usage feedback, which the service uses to improve the quality of future search results.
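To make the query side more concrete, here is a minimal sketch of how a search request body could be assembled. The field names (`requestMetadata`, `jobQuery`, `locationFilters`, `distanceInMiles`) follow our reading of the public CTS REST reference and may differ between API versions; the function name, its parameters, and the default domain are hypothetical and not taken from our codebase.

```python
import json
from typing import Optional


def build_search_request(
    text: str,
    location: Optional[str] = None,
    radius_miles: Optional[float] = None,
    session_id: str = "anonymous-session",  # hypothetical default
    user_id: str = "anonymous-user",        # hypothetical default
    domain: str = "jobs.example.com",       # hypothetical domain
) -> dict:
    """Build a JSON-serializable body for a CTS job search call (sketch)."""
    job_query = {"query": text}
    if location:
        # Optional location filter; the radius is only sent when provided.
        location_filter = {"address": location}
        if radius_miles is not None:
            location_filter["distanceInMiles"] = radius_miles
        job_query["locationFilters"] = [location_filter]
    return {
        "requestMetadata": {
            "domain": domain,
            "sessionId": session_id,
            "userId": user_id,
        },
        "jobQuery": job_query,
    }


if __name__ == "__main__":
    body = build_search_request("python developer", "Berlin", 10)
    print(json.dumps(body, indent=2))
```

Keeping the body construction in a pure function makes it easy to unit test without touching the network; the HTTP call and authentication are deliberately left out of the sketch.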

From the beginning, the plan was to integrate CTS with our job platform in a way that would let us A/B test the current home-grown ElasticSearch implementation against Google CTS. With the results in hand, we could then decide which one to keep.
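An A/B test like this needs a stable way to decide which engine serves each user. The sketch below shows one common approach, deterministic hashing of a user identifier. It is an illustration only: this post does not describe how users were actually split, and the function name, salt, and 50/50 default are assumptions.

```python
import hashlib


def assign_engine(user_id: str, cts_share: float = 0.5,
                  salt: str = "search-ab-test") -> str:
    """Map a user to "cts" or "elasticsearch" deterministically.

    The same user_id always lands in the same group, so a user sees a
    consistent engine across sessions. `salt` and `cts_share` are
    hypothetical knobs for this sketch.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    # Turn the first 8 bytes of the hash into a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "cts" if bucket < cts_share else "elasticsearch"


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_engine(uid))
```

Hashing instead of drawing a random number on each request avoids storing the assignment anywhere, and changing the salt reshuffles the groups for a new experiment.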

Architecture

The system can be split roughly into three main components:

- Job base indexing: a background process that pushes our job base data to Google CTS (around 400,000 currently active jobs out of a total of 20 million).
- Frontend integration: forwards search queries to CTS and displays the results to users.
- Feedback user actions: feeds CTS’s artificial intelligence with usage information about our users’ behavior.

Below we review each of these in detail.

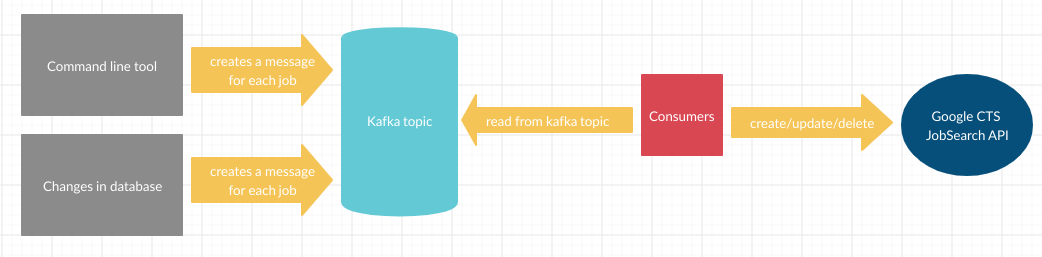

Job base indexing

The job base indexing background job can be triggered in two ways: (1) whenever a job is updated in the database, a message is queued in Kafka, which triggers the subsequent processing; or (2) someone runs a terminal command with specific parameters to sync a group of job records.

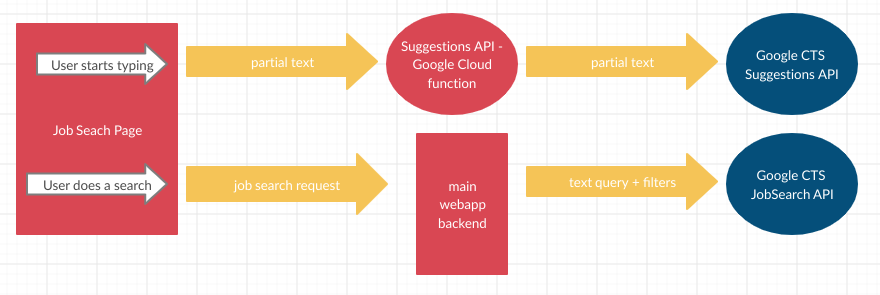
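The two triggers described above can share a single sync routine. The following is a minimal sketch of that idea, not our production code: the message shape (`{"job_id": ...}`), the `--job-ids` flag, and the injected `load_job`, `push_to_cts`, and `remove_from_cts` callables are all hypothetical, and removing inactive jobs from CTS is an assumption based on the fact that only active jobs are indexed.

```python
import argparse
from typing import Callable, Iterable, List, Optional, Tuple


def sync_jobs(
    job_ids: Iterable[int],
    load_job: Callable[[int], Optional[dict]],
    push_to_cts: Callable[[dict], None],
    remove_from_cts: Callable[[int], None],
) -> Tuple[int, int]:
    """Sync the given jobs to CTS and return (pushed, removed) counts."""
    pushed = removed = 0
    for job_id in job_ids:
        job = load_job(job_id)
        if job is not None and job.get("active"):
            push_to_cts(job)          # create or update the job in CTS
            pushed += 1
        else:
            remove_from_cts(job_id)   # assumption: inactive jobs are removed
            removed += 1
    return pushed, removed


def handle_kafka_message(message: dict, **deps) -> Tuple[int, int]:
    """Trigger 1: a job changed in the database and a message was queued."""
    return sync_jobs([message["job_id"]], **deps)


def parse_cli(argv: List[str]) -> List[int]:
    """Trigger 2: an operator syncs a group of jobs from the terminal."""
    parser = argparse.ArgumentParser(description="Sync job records to CTS")
    parser.add_argument("--job-ids", type=int, nargs="+", required=True)
    return parser.parse_args(argv).job_ids
```

Passing the database and CTS operations in as callables keeps the routine independent of the Kafka client and the CTS client, so both entry points exercise exactly the same logic.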

Frontend integration

The frontend integration uses two APIs: the autocomplete suggestions API and the job search API. For every keystroke on the text field, we fire a request to Google’s suggestions API. We use a Google Cloud Function to proxy those calls because credential requirements prevent us from calling the API directly.
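The core of such a proxy is small. Below is a minimal sketch of the handler logic, written as a plain function so it does not depend on any particular Cloud Functions framework. The `q` parameter name and the injected `call_cts_complete` callable (which would hold the credentials and call Google) are hypothetical; the `completionResults` and `suggestion` field names follow our reading of the CTS completion response and should be checked against the API version in use.

```python
from typing import Callable, Tuple


def suggestions_proxy(
    params: dict,
    call_cts_complete: Callable[[str], dict],
) -> Tuple[int, dict]:
    """Return (HTTP status, JSON body) for an autocomplete request.

    Credentials never reach the browser: they live inside
    `call_cts_complete`, which runs server side.
    """
    query = (params.get("q") or "").strip()
    if not query:
        return 400, {"error": "missing query"}
    response = call_cts_complete(query)
    # Forward only the suggestion strings the text field needs.
    suggestions = [
        result["suggestion"]
        for result in response.get("completionResults", [])
    ]
    return 200, {"suggestions": suggestions}
```

Returning only the suggestion strings keeps the payload small, which matters when a request is fired on every keystroke.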

For job search queries, the frontend sends the request to our main application, which then synchronously queries the Google CTS API.

Feedback user actions

We already had a system in place to track important user actions. It pushes messages to a Kafka topic regarding events such as job posts displayed, job application button clicks, and searches performed by users. All we had to do to send this information to Google’s API was to spin up some Kafka consumers in the background. These consumers read messages from the topics, change the data to a suitable format, and push the events to Google’s API.
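The "change the data to a suitable format" step might look like the sketch below. Our internal message fields (`event`, `event_id`, `search_request_id`, `timestamp`, `cts_job_names`) are hypothetical names invented for this example. The output shape (`requestId`, `eventId`, `createTime`, `jobEvent`) and the event types `IMPRESSION` and `APPLICATION_START` follow our reading of the CTS client event reference and should be verified against the API version in use. The sketch only covers displayed jobs and apply clicks; other messages are skipped.

```python
from datetime import datetime, timezone
from typing import Optional

# Hypothetical internal event names mapped to CTS job event types.
EVENT_TYPE_MAP = {
    "job_displayed": "IMPRESSION",
    "apply_clicked": "APPLICATION_START",
}


def to_client_event(message: dict) -> Optional[dict]:
    """Convert one tracking message into a CTS client event body.

    Returns None for events this sketch does not forward.
    """
    cts_type = EVENT_TYPE_MAP.get(message.get("event"))
    if cts_type is None:
        return None
    created = datetime.fromtimestamp(message["timestamp"], tz=timezone.utc)
    return {
        # Ties the event back to the search response that showed the job.
        "requestId": message["search_request_id"],
        "eventId": message["event_id"],
        "createTime": created.strftime("%Y-%m-%dT%H:%M:%SZ"),
        "jobEvent": {
            "type": cts_type,
            "jobs": list(message["cts_job_names"]),
        },
    }
```

A consumer loop would call `to_client_event` for each message it reads and post every non-`None` result to the API, so the transformation itself stays free of Kafka and HTTP concerns.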

Performance analysis

Comparing search engines is not a trivial task. We thought carefully about the goals of our job search feature and the metrics we could use to evaluate it. We established a number of key performance indicators (KPIs); the ones we are most interested in are:

- Number of zero-click searches

  Sometimes our users perform a search and don’t click anything. Maybe they realized they could apply an additional search filter, or maybe they are not very motivated by the results. Whatever the reason, we see a significant difference between the two engines: on the first page of results, roughly 90% of daily CTS searches get no clicks, against 80% for ES.

- Click / search ratio

  The ratio of the number of clicks to the number of searches. On its own it might not be very meaningful. Are more clicks better? Maybe it means users are more engaged (which would be better), or maybe they have to work harder to find what they are looking for (which would be worse). In our case, users exposed to ES results click significantly more than those exposed to CTS. This figure will be much more useful when paired with the other KPIs we are still collecting.

- Total number of job applications

  It is fair to assume that the search engine with the best results would lead more of our users to apply for jobs, which makes this a good example of an objective indicator. We are still collecting the data and have no conclusions yet.
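The first two KPIs are simple to compute once each search is logged together with its engine and click count. Here is a minimal sketch; the `SearchRecord` shape and the function name are hypothetical, and the numbers in the usage example are made up for illustration, not our measured results.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class SearchRecord:
    engine: str   # "cts" or "es"
    clicks: int   # clicks on the first page of results for this search


def search_kpis(records: Iterable[SearchRecord], engine: str) -> Optional[dict]:
    """Compute zero-click rate and click / search ratio for one engine."""
    own = [r for r in records if r.engine == engine]
    if not own:
        return None  # no searches recorded for this engine
    zero_click = sum(1 for r in own if r.clicks == 0)
    total_clicks = sum(r.clicks for r in own)
    return {
        "searches": len(own),
        "zero_click_rate": zero_click / len(own),
        "clicks_per_search": total_clicks / len(own),
    }


if __name__ == "__main__":
    sample = [
        SearchRecord("cts", 0), SearchRecord("cts", 0),
        SearchRecord("cts", 2), SearchRecord("cts", 0),
        SearchRecord("es", 1), SearchRecord("es", 0),
    ]
    print(search_kpis(sample, "cts"))
    print(search_kpis(sample, "es"))
```

Note that the two metrics can move independently: in the sample above both engines have the same click / search ratio but different zero-click rates, which is one reason we look at them together.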

Conclusion

We are still running the A/B test to collect enough performance and user behavior data to make a decision. Naturally, we are not going to base our decision solely on performance. We will also factor in other aspects, such as vendor support and direct costs, as well as indirect costs like server maintenance.