How to create an internal Google Cloud load balancer resource on Terraform

Glossary

- MIG : Managed Instance Group

- ILB : Internal Load Balancer

- GCP : Google Cloud Platform

Abstract

When I first wanted to use an internal load balancer on GCP with Terraform, I found the official documentation a bit vague and confusing. My first thought was that the internal load balancer was a single special resource, some kind of router. It is actually more complex, and this is what I will try to clarify in this post.

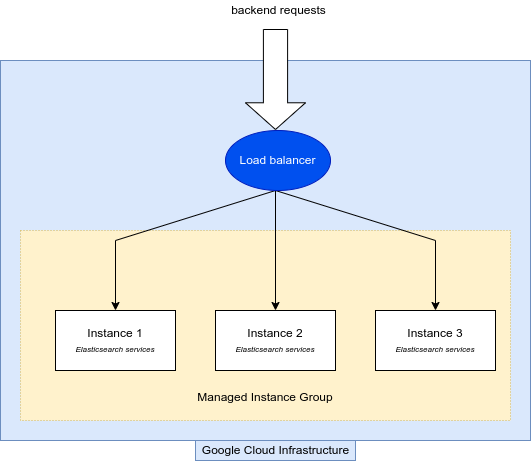

First, here is what I want to do:

I want a backend application to access an Elasticsearch cluster hosted in a MIG on Google Cloud. I want to reach this MIG, which contains multiple instances, through a single IP address, so that I can create and delete instances without changing that IP. Moreover, I want the HTTP requests to be spread across the instances inside the MIG. I don't want a single instance to receive all the requests, since all nodes in the cluster are masters and should all have the same workload.

The theory

So how do you set up this internal load balancer? Whether you use Terraform or the Google Cloud console, there are several resources to create. An ILB is made of a frontend (the forwarding rule), a proxy with its URL map, and a backend service. You will also need some complementary infrastructure, such as subnetworks or firewall rules, which you may already have created.
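As a sketch of that complementary infrastructure, here is what it could look like in Terraform. Internal managed load balancers draw their proxy addresses from a proxy-only subnet in the region, and the firewall must let both Google's health-check ranges and that proxy-only subnet reach the instances. This assumes Elasticsearch listens on its default HTTP port 9200 and that the nodes carry a network tag `es-node`; the tag, resource names and CIDR range are hypothetical. Older provider versions and docs use `purpose = "INTERNAL_HTTPS_LOAD_BALANCER"` instead of `REGIONAL_MANAGED_PROXY`.

```hcl
# Proxy-only subnet (hypothetical name and range): the load balancer's
# proxies take their addresses from here. One per region and VPC.
resource "google_compute_subnetwork" "proxy_only" {
  project       = var.projectName
  name          = "proxy-only-subnet"
  region        = var.region
  network       = var.vpc_id
  ip_cidr_range = "10.129.0.0/23"
  purpose       = "REGIONAL_MANAGED_PROXY"
  role          = "ACTIVE"
}

# Allow Google Cloud health checkers to reach the Elasticsearch nodes.
resource "google_compute_firewall" "allow_health_checks" {
  project       = var.projectName
  name          = "allow-es-health-checks"
  network       = var.vpc_id
  direction     = "INGRESS"
  source_ranges = ["35.191.0.0/16", "130.211.0.0/22"]
  target_tags   = ["es-node"] # assumed tag on the MIG instances

  allow {
    protocol = "tcp"
    ports    = ["9200"] # assumed Elasticsearch HTTP port
  }
}

# Allow the load balancer proxies, which connect from the proxy-only
# subnet, to reach the Elasticsearch nodes.
resource "google_compute_firewall" "allow_proxies" {
  project       = var.projectName
  name          = "allow-ilb-proxies-to-es"
  network       = var.vpc_id
  direction     = "INGRESS"
  source_ranges = [google_compute_subnetwork.proxy_only.ip_cidr_range]
  target_tags   = ["es-node"]

  allow {
    protocol = "tcp"
    ports    = ["9200"]
  }
}
```

The forwarding rule itself must use a regular subnet, not the proxy-only one.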

Here is the networking architecture I needed for load balancing:

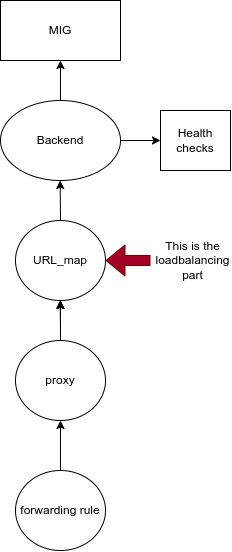

Google Cloud documentation diagram of the ILB infrastructure and request flow.

As we said, a load balancer is not a single resource: what we call a load balancer is this whole chain of resources.

- The forwarding rule holds the internal IP address, protocol and port that clients inside the VPC connect to, and forwards their traffic to a target proxy.

- The target proxy is regional, so we need one proxy per region we want to balance. It terminates the clients' HTTP connections and hands each request to the URL map, which can serve one or several backend services. Note that the IP address belongs to the forwarding rule, not to the proxy.

- The URL map routes each request to the appropriate backend service, based on its host and path.

- The backend service is a logical resource that specifies how traffic is spread across the instances it is linked to. For example, with ROUND_ROBIN, each new request goes to the next instance in turn, and once the last instance has received one, the cycle starts again from the first.
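With a single backend, the URL map only needs a `default_service`. To illustrate its routing role, here is a sketch of a regional URL map that sends `/other-app/*` to a second backend service and everything else to the Elasticsearch one. `google_compute_region_backend_service.other_app` and the path are hypothetical and not part of this setup.

```hcl
resource "google_compute_region_url_map" "routing_example" {
  project = var.projectName
  name    = "some-internal-http-routing"
  region  = var.region

  # Requests that match no host rule go to the Elasticsearch backend.
  default_service = google_compute_region_backend_service.default.id

  # Every host is handled by the "by-path" matcher below.
  host_rule {
    hosts        = ["*"]
    path_matcher = "by-path"
  }

  path_matcher {
    name            = "by-path"
    default_service = google_compute_region_backend_service.default.id

    # Paths under /other-app/ go to a second (hypothetical) backend service.
    path_rule {
      paths   = ["/other-app/*"]
      service = google_compute_region_backend_service.other_app.id
    }
  }
}
```

Note that the URL map only picks a backend service; choosing the instance inside it is still the backend service's job.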

In practice

We need to industrialise this process, so everything should be in IaC (Infrastructure as Code). So how do we reproduce such an architecture with Terraform?

In Terraform it looks like this:

```hcl
# Backend service: decides how requests are spread across the MIG instances.
resource "google_compute_region_backend_service" "default" {
  project               = var.projectName
  name                  = "some-mig-http-backend"
  region                = var.region
  health_checks         = [google_compute_health_check.es_ping_autohealing.id]
  protocol              = "HTTP"
  load_balancing_scheme = "INTERNAL_MANAGED"
  locality_lb_policy    = "ROUND_ROBIN"
  # Must match a named port declared on the MIG.
  port_name = "some-port"

  backend {
    group           = google_compute_region_instance_group_manager.some_mig.instance_group
    balancing_mode  = "UTILIZATION"
    capacity_scaler = 1.0
  }
}

# URL map: sends every request to the backend service above.
resource "google_compute_region_url_map" "default" {
  project         = var.projectName
  name            = "some-internal-http-loadbalancer"
  provider        = google-beta
  region          = var.region
  default_service = google_compute_region_backend_service.default.id
}

# Target HTTP proxy: terminates client connections and consults the URL map.
resource "google_compute_region_target_http_proxy" "default" {
  project  = var.projectName
  name     = "some-http-proxy"
  provider = google-beta
  region   = var.region
  url_map  = google_compute_region_url_map.default.id
}

# Forwarding rule (frontend): holds the internal IP and port clients call.
resource "google_compute_forwarding_rule" "google_compute_forwarding_rule" {
  provider              = google-beta
  project               = var.projectName
  name                  = "some-http-internal-lb-frontend"
  region                = var.region
  ip_protocol           = "TCP"
  load_balancing_scheme = "INTERNAL_MANAGED"
  port_range            = "80"
  target                = google_compute_region_target_http_proxy.default.id
  network               = var.vpc_id
  # Regular subnet where the internal IP is allocated (not the proxy-only one).
  subnetwork   = var.subnet_public_id
  network_tier = "PREMIUM"
}
```
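The backend service refers to two resources the code does not define: the health check `es_ping_autohealing` and the MIG `some_mig`, whose named port has to match `port_name = "some-port"`. Here is a minimal sketch of both, assuming Elasticsearch serves HTTP on port 9200 and answers `200` on `/` when healthy, and that an instance template `google_compute_instance_template.es_node` exists elsewhere (hypothetical). Sizes and timings are arbitrary. Google Cloud also offers a regional health check resource, `google_compute_region_health_check`; check the load balancer documentation for the scope your setup expects.

```hcl
# HTTP health check used by the backend service and for MIG autohealing.
resource "google_compute_health_check" "es_ping_autohealing" {
  project             = var.projectName
  name                = "es-ping-autohealing"
  check_interval_sec  = 10
  timeout_sec         = 5
  healthy_threshold   = 2
  unhealthy_threshold = 3

  http_health_check {
    port         = 9200 # assumed Elasticsearch HTTP port
    request_path = "/"
  }
}

resource "google_compute_region_instance_group_manager" "some_mig" {
  project            = var.projectName
  name               = "some-mig"
  region             = var.region
  base_instance_name = "es-node"
  target_size        = 3

  version {
    # Hypothetical template, defined elsewhere.
    instance_template = google_compute_instance_template.es_node.id
  }

  # Named port referenced by port_name in the backend service.
  named_port {
    name = "some-port"
    port = 9200
  }

  # Recreate instances that fail the health check.
  auto_healing_policies {
    health_check      = google_compute_health_check.es_ping_autohealing.id
    initial_delay_sec = 300
  }
}
```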

Here is what it looks like as a simplified Terraform module diagram:

Each row is a resource pointing to another one. We can follow a request through this logical Terraform diagram: a request coming from our backend application is sent to our service. The entry point is the forwarding rule, which holds the IP address of the service. The forwarding rule passes the request to its target proxy (each forwarding rule points to exactly one proxy, even if you have several regional proxies), which hands it to the URL map. The URL map is an important resource because it decides, from the request's host and path, which backend service should receive the request. The backend service then picks the instance, and that choice is the actual load balancing.
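Since the forwarding rule is what holds the service IP, one way to guarantee that this IP never changes, even if the forwarding rule is recreated, is to reserve a static internal address and pass it to the rule. A sketch with hypothetical names, reusing the article's variables; the output simply exposes the IP so it can be wired into the backend application's configuration.

```hcl
# Reserved internal IP in the same subnet as the forwarding rule.
resource "google_compute_address" "es_ilb_ip" {
  project      = var.projectName
  name         = "es-ilb-ip"
  region       = var.region
  subnetwork   = var.subnet_public_id
  address_type = "INTERNAL"
}

# Then, inside google_compute_forwarding_rule.google_compute_forwarding_rule:
#   ip_address = google_compute_address.es_ilb_ip.address

# IP address the backend application should call.
output "es_ilb_ip" {
  value = google_compute_address.es_ilb_ip.address
}
```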

What is confusing about Google Cloud load balancing?

The confusing part is that in Terraform, the load balancing feature seems to come from the url_map resource plus a few changes to other resources, whereas in GCP the actual load balancing (picking an instance) is handled by the backend service.

The url_map is like a plugin resource you add to your backend when you want to switch to load balancing.

So in Terraform, besides the url_map, you need to add these lines to the backend service:

```hcl
load_balancing_scheme = "INTERNAL_MANAGED"
locality_lb_policy    = "ROUND_ROBIN"
```

We can choose the request spreading strategy among ROUND_ROBIN, LEAST_REQUEST, RING_HASH and a few other values (cf. the `locality_lb_policy` argument in the Terraform GCP provider documentation).
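For example, if you wanted requests carrying the same cookie to stick to the same node instead of being spread evenly, a `RING_HASH` variant of the backend service could look like this sketch. It replaces the `default` backend service rather than being added next to it; the cookie name and TTL are arbitrary assumptions. For the Elasticsearch case of this post, where every node should receive the same load, `ROUND_ROBIN` or `LEAST_REQUEST` remain the better fit.

```hcl
# Variant of google_compute_region_backend_service.default (replaces it).
resource "google_compute_region_backend_service" "default" {
  project               = var.projectName
  name                  = "some-mig-http-backend"
  region                = var.region
  health_checks         = [google_compute_health_check.es_ping_autohealing.id]
  protocol              = "HTTP"
  load_balancing_scheme = "INTERNAL_MANAGED"
  port_name             = "some-port"

  # Hash each request onto a ring of backends instead of cycling through them.
  locality_lb_policy = "RING_HASH"
  # Use an HTTP cookie as the hash key.
  session_affinity = "HTTP_COOKIE"

  consistent_hash {
    http_cookie {
      name = "es-lb-affinity" # hypothetical cookie name
      ttl {
        seconds = 3600
      }
    }
  }

  backend {
    group           = google_compute_region_instance_group_manager.some_mig.instance_group
    balancing_mode  = "UTILIZATION"
    capacity_scaler = 1.0
  }
}
```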

Now your backend service works with the URL map and vice versa. Well done, you just created a load balancer on GCP with Terraform!